

Our laboratory conducts joint research with professors in the Faculty of Medicine and Pharmacy to develop next-generation education systems and drug navigation apps for foreigners. Using a virtual reality (VR) system, the shape of a real person's lungs and their movement during inhalation and exhalation can be animated in real time on a computer. By placing the relevant sound information at various locations on the model, the system helps learners understand why a particular sound occurs and why the stethoscope should be placed at a particular position.

The following is an example screen from the next-generation educational inquiry system, which responds interactively to spoken instructions such as "Please take a deep breath."

Click the image above to display, in a web browser, a VR rendering of data obtained by scanning real lungs, a heart, and an aorta. (The next screen may warn that WebGL is not supported, but pressing OK usually displays it correctly. Voice recognition is not supported.)

Visually impaired people face difficulties at intersections and pedestrian crossings because most traffic lights make no sound, so they cannot tell whether the signal is green or red.

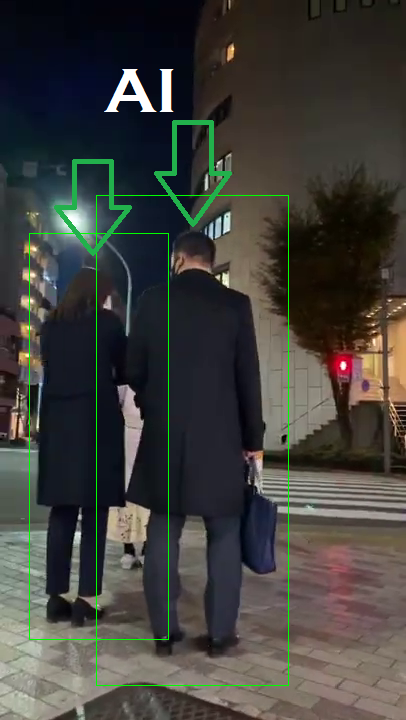

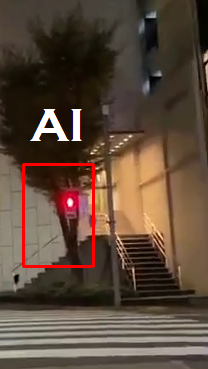

In collaboration with external organizations, our laboratory is developing a system that uses artificial intelligence (AI) to recognize pedestrian signals and convey their state to visually impaired people. The following image shows the results of recognizing people and red pedestrian signals.

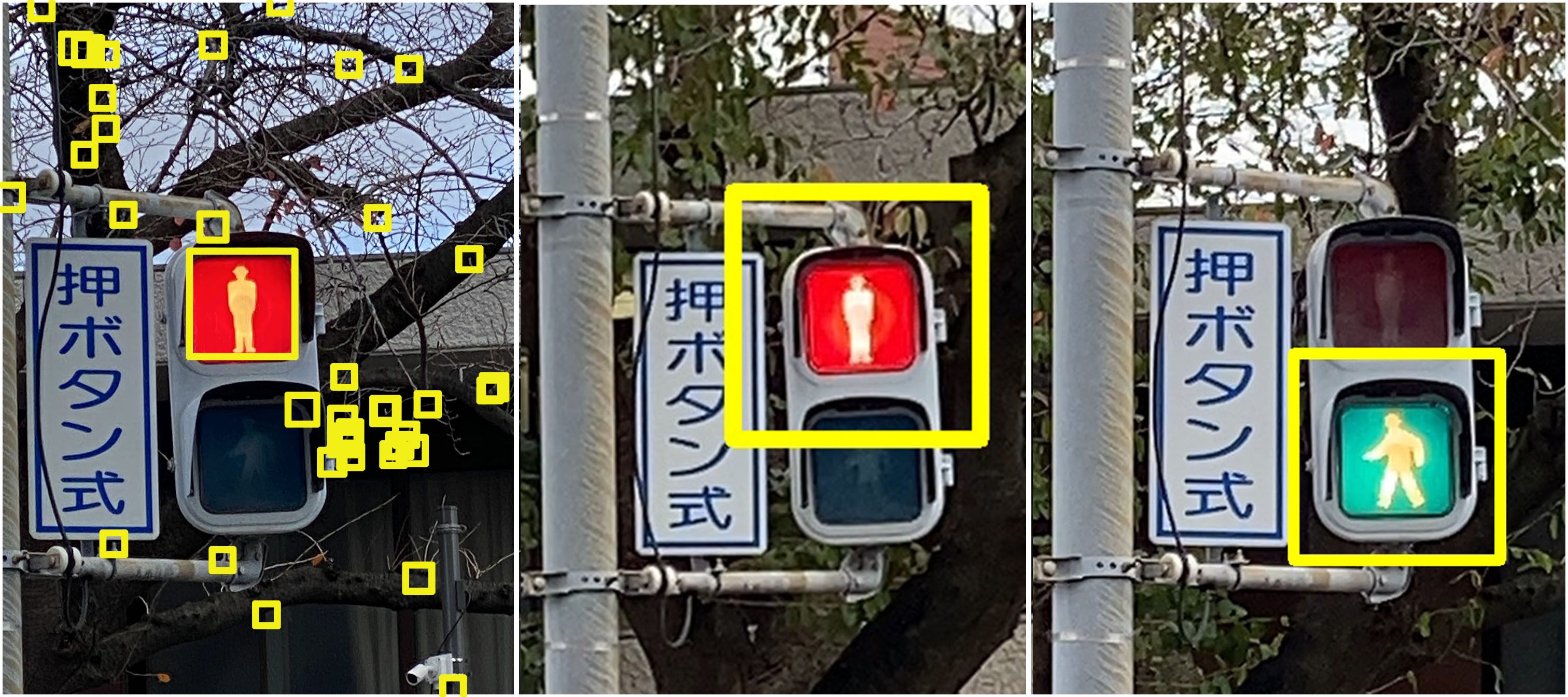

The leftmost result above is based only on the color information of the signal, so every region containing a red component is detected, not just the red signal. If a cascade classifier or YOLO (You Only Look Once), both AI / machine learning methods, is trained on many images to learn the shape of a traffic light, the signal can be recognized correctly, as shown on the right.
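The over-detection that comes from using color alone can be illustrated with a minimal sketch. It is not the laboratory's implementation: the function names, the threshold values (`min_red`, `dominance`), and the tiny sample image are all assumptions chosen for illustration.

```python
def is_reddish(pixel, min_red=150, dominance=1.5):
    """Return True if an (R, G, B) pixel is dominated by its red component.

    min_red and dominance are illustrative thresholds (assumptions).
    """
    r, g, b = pixel
    return r >= min_red and r >= dominance * max(g, b, 1)


def red_mask(image):
    """Build a binary mask (rows of 0/1) marking every reddish pixel."""
    return [[1 if is_reddish(p) else 0 for p in row] for row in image]


# Hypothetical 2x3 image: only the first pixel belongs to a red signal lamp.
image = [
    [(230, 30, 25), (90, 90, 90), (200, 40, 35)],     # red lamp, road, red signboard
    [(40, 160, 60), (120, 120, 125), (180, 60, 50)],  # green lamp, sky, tail light
]

mask = red_mask(image)
print(mask)  # [[1, 0, 1], [0, 0, 1]]
```

The mask marks the signboard and the tail light as well as the lamp, because the test looks only at color. A classifier trained on the shape of a traffic light is meant to reject such regions.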

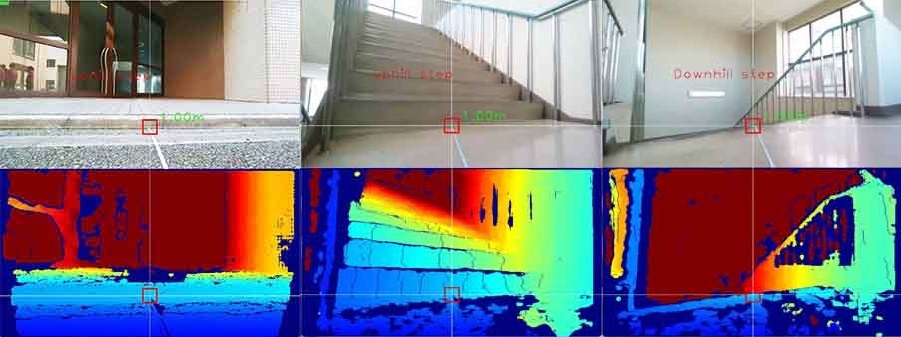

The lower image applies a technique that calculates distance from a pair of left and right stereo images. It shows the result of detecting steps, which are dangerous for visually impaired people. Red indicates far distances and blue indicates near distances. The center of the square is the point 1 m from the camera on flat ground without steps. A step is recognized by reading the gradient in distance that it causes relative to this reference distance.
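The idea can be sketched with the standard pinhole stereo relation, depth = focal length × baseline / disparity, followed by a simple check for abrupt changes in distance. This is only a sketch under stated assumptions: the focal length, baseline, disparity samples, and the `jump_m` threshold are hypothetical values, and the actual system may detect the gradient differently.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Distance in meters from stereo disparity: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def find_steps(depths_m, jump_m=0.10):
    """Return indices where distance changes abruptly between neighbors.

    jump_m is an illustrative threshold (assumption), not a calibrated value.
    """
    return [
        i
        for i in range(1, len(depths_m))
        if abs(depths_m[i] - depths_m[i - 1]) > jump_m
    ]


# Hypothetical camera: 700 px focal length, 0.1 m baseline.
FOCAL_PX = 700.0
BASELINE_M = 0.1

# Hypothetical disparities sampled along one scan line near the 1 m point.
disparities = [70.0, 68.0, 66.0, 50.0, 49.0]
depths = [depth_from_disparity(d, FOCAL_PX, BASELINE_M) for d in disparities]

steps = find_steps(depths)
print(steps)  # [3]
```

The first sample corresponds to about 1 m. Distance then grows smoothly until the fourth sample, where it jumps by roughly 0.34 m, and that index is reported as a candidate step.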

The following is part of a system screen that displays MRI images as an animation, making the overall picture easier to grasp.

Click the image above to display part of the system in your web browser.

The figure below shows a drug navigation app for smartphones, intended for foreigners visiting pharmacies. For example, when we fall ill abroad and go to a pharmacy, every product is unfamiliar, the labels contain no Japanese, and the English consists entirely of technical terms, so it is very hard to decide which one to buy. Foreigners in Japan face the same problem, so we are co-developing smartphone apps to solve it with teachers and students in the Faculty of Pharmacy.

